I spent the weekend at Citcon (Continuous Integration and Testing Conference) in Minneapolis. Citcon (which is pronounced Kitcon – because it sounds cooler) is an open spaces conference which means that there are no scheduled talks. The people who show up propose ideas and then they vote on what they want to see. It sounds like it would never work but it actually works very well. For instance, Toby Tripp proposed a session on testing smells and it got enough votes to get a session but when I showed up there were only 6 people. Which kinda bummed me out until we started talking, then I realized that we had exactly the right 6 people and another 20, 50, or 100 half interested dudes typing away on their computers really wouldn’t have added to the talk. As it was the talk was intimate and to the point.

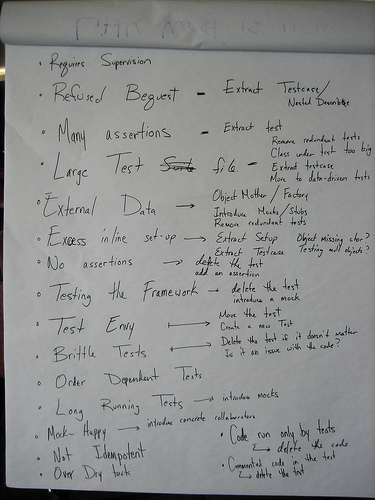

We came up with the following smells (a smell is a sign that something might be wrong – Fowler used this metaphor to great effect in his seminal book “Refactoring”):

Requires Supervision:

A test is not automatic – it needs user input to function. Someone has to watch the test and enter data periodically.

Solution: Don’t do that.

Refused Bequest:

A mega setup that some tests ignore, some use, and most rely on but only for a few lines. When any individual test fails it’s hard to parse the setup to see what’s going on.

Solution: In RSpec you can use nested describes/contexts to have mini setups for each situation. Or you can write private method setups that are more focused. Or you can create multiple test files for different contexts.

Many Assertions:

When a test tests way too much its failure is often hard to track down.

Solution: break the test up into smaller atomic chunks (test cases) that zero in on one or two things a method should do.

Large Test File:

Things can be hard to find in a large test file and its documentation value is lost.

Solutions: It could be the class under test is too big and needs to be broken up into smaller discrete bits. Or you may have redundant tests that should be removed. Maybe you need a lot of setup and could move to a data or factory driven test. Or you could extract testcases into separate files (or describe blocks) each with a specific purpose to organize things.

External Data:

A test that needs external data (fixtures, files, helper classes, etc.) in order to run can be hard to understand as the reader must file hop to get a clear picture.

Solution: Avoid this, if possible, by using standardized Object Mothers, mocks/stubs, and removing redundant tests.

Excess inline setup:

Too much setup in every test means the reader has to skip most of the test to get to the meat.

Solution: Extract to setup methods or different test files. Focus and organize your tests.

No Assertions:

A test that has no assertions only exists to lie about code coverage.

Solution: Don’t do that. Delete the test or add an assertion (mock expectations count as assertions).

Testing the Framework:

If you’re using a framework you pretty much have to assume it works. Testing it along with your other tests only clutters things up.

Solution: Delete the framework tests.

Test Envy:

A test that is really testing some other class than the one it is supposed to. A common example of this is testing model validations in the controller when they should be tested in the model test.

Solution: Move the test or delete it if it is redundant.

Brittle Tests:

Tests the break all the time when small things change undermine the importance of test breakage. Examples are testing that a greeting is inside an “h1” in a view or the exact wording of text on the screen.

Solution: Perhaps you don’t really need to assert that. Or it might be a sign of a deeper problem with your code when it’s tests are fragile.

Order Dependent Tests:

Tests that need other tests to run before them really screw things up when things get moved around.

Solution: Make sure your tests clean up after themselves or are reasonably isolated from the outside world.

Long Running Tests:

When the feedback loop of code, test, code gets too long it can encourage bad behavior.

Solution: Spend time reducing the time of your tests. Buy faster machines. Mock out expensive operations (such as database calls).

Mock Happy:

A test that needs 17 mocks to run can be quite fragile, resistant to change, and hard to understand.

Solution: This could be a sign that the code you are testing has too many dependencies. Or you could introduce concrete collaborators. Mock/Stub out that which you don’t have access to or is expensive, but the rest can be real models. Yes this makes your tests less unit-y, but it will make them more robust and easier to understand

Not Idempotent:

Fancy words meaning your test changes the state of something permanently and thereby introduces the possibility of order dependent tests.

Solution: Clean up after yourself.

Overly Dry Tests:

DRY (Don’t Repeat Yourself) is a good idea, but pulling out all repetition can lead to some very hard to understand tests.

Solution: Some moistness is alright if it helps the reader understand the intent of the test.

Code Run Only by Tests:

Dead code (never touched in production) is very confusing to the developer trying to understand the system. Tested dead code doubly so.

Solution: Delete the test and the code. You’ll still have it in source control if you ever need it in the future.

Commented Code in the Test:

Commented out assertions or setup may mean someone took a quick path to making the test pass.

Solution: Investigate if the code should be there. If there’s no reason for it, delete it.