On a long enough timeline it is inevitable that some business folk will ask you to make your application accept and work with ‘custom’ data even though that application is backed by a relational database. No problemo, you say and serialize that data into JSON (or yaml, xml, etc) in a column. All is well for a minute until these product people want to filter/search and sort on one of these custom attributes. Once again, not a problem, you simply promote the JSON field into a column* and the customer can manipulate tables of data as they like. It takes some development time, not terribly much, but it does take some. Now comes the curve ball: Customers want all their proprietary data fields to be able to sort and filter by default.

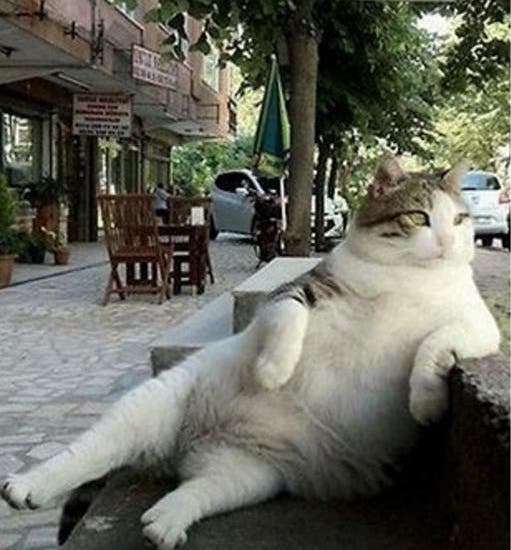

If you solve this problem yourself, inevitably you end up re-inventing the Entity Attribute Value anti-pattern. Basically, the main table links to another table or two that define a ‘custom’ field and its value. For example, the CHONKY_CATS table is linked to a CHONKY_CATS_VALUES table that references a row in the CHONKY_CATS_FIELDS table. This can also be done with one table that holds both attribute and value. The entity part of EAV is the reference to the ‘main’ table, btw.

Why is this an anti-pattern? First, it’s only an anti-pattern in relational databases. Datomic, for example, uses the EAV pattern wonderfully because it is designed from the ground up to embrace the concept. Relational databases, on the other hand, are very much not designed for such shenanigans. They work best when all the data for a given model are in one table. Any filter or sort based on a custom field contained in another table or two now needs a join or two. Clearly, this not going to perform at scale.

Buuuut, the customers really want it and other products support first class custom fields so despite the objections the word comes down that we are doing this. What are your options? Currently the only gem that supports EAV, has been updated in the last 3 years, and can be made to work with a modern ActiveRecord/Rails is ‘eav_hashes’. You’ll have to make some adjustments to the migrations to deal with some Rails 5 changes. Unfortunately, you are using Oracle and the ‘value’ column is a CLOB (Character Large Object) which are death in Oracle and definitely can’t be sorted on. Time to re-invent the busted wheel!

We now shift from the second person to my first person account of why I’m doing this in my application and how.

After objecting that we should just keep promoting JSON serialized fields to columns every 3-6 months as we have before, it was pointed out that our application doesn’t have to web-scale. Our app is only for managing large piles of assets (money in various states of liquidity) and therefore only ever has hundreds to thousands of concurrent users. What completely sunk my case for keeping the status quo was the fact that a much larger application in our company had implemented custom fields using an EAV type system.

Trying to find out the least bad way to do a bad thing is difficult in software. Any discussions online generally stop when someone points out that EAV in a relational db is bad (which is kinda why I’m writing this article).

After some thought we came up with two final contenders: the 2 table solution and the 8. The 2 table solution is fairly straight forward: A custom fields table and custom values table. The fields table stores the attribute’s name and type (we chose to support integers, floats, dates/times, and strings). The values table has 4 value columns, 3 of which are always null. We, unimaginatively named them integer_value, float_value, date_value, and string_value. Additionally there are 2 foreign key columns binding the table to a custom field and the original object table. Here’s an example:

| CHONKY_CATS | CHONKY_CAT_CUSTOM_VALUES | CHONKY_CAT_CUSTOM_FIELDS |

|---|---|---|

| id: 3 | string_value: null | id: 2525 |

| float_value: 9.9 | field_name: “Awesomeness rating” | |

| integer_value: null | field_type: “float” | |

| date_value: null | ||

| chonky_cat_id: 3 | ||

| chonky_cat_custom_field_id: 2525 |

Another way would be to have 8 tables. One set of field and value tables for each type we support:

| CHONKY_CATS | CHONKY_CAT_FLOAT_VALUES | CHONKY_CAT_FLOAT_FIELDS |

|---|---|---|

| id: 3 | value: 9.9 | id: 2525 |

| chonky_cat_id: 3 | field_name: “Awesomeness rating” | |

| chonky_cat_field_id: 2525 | field_type: “float” |

The other tables for integer, string, and date are just variations of the above. We tested the 8 table solution against the 2 table solution and with 10,000 records each the insert and query results only differed by a few percentage points. Thus, we decided the extra tables were not worth it.

We found out that we could use ORDER BY STRING_VALUE, INTEGER_VALUE, FLOAT_VALUE, DATE_VALUE because 3 of the 4 values are always null so they will be ignored, resulting in the proper sort. Our dba (database administrator) took a look at that, grimaced, and asked if we could please use the field type to have different types of specific sorts. We have reasonable dbas in our shop so we listen to them lest they leave and be replaced with scary dbas. And so we used the field type information to pick the correct sort. Here’s an example of one of the 4 sorts:

def self.custom_sort_by_float sort_by, sort_order

joins( chonky_cat_custom_values: :chonky_cat_custom_field).

where( "chonky_cat_custom_fields.field_name = ?", sort_by).

order( "chonky_cat_custom_values.float_value" => sort_order)

end

```

Currently we don’t use a loop and define_method to make all 4 happen in a more DRY fashion. I might; I’m not sure if it’s worth the obfuscation.

However, combining 2 queries about 2 different custom fields was getting me zero results even though I knew there were rows that match. This was my naive SQL:

select * from CHONKY_CATS

join CHONKY_CAT_CUSTOM_VALUES

on CHONKY_CATS.id = CHONKY_CAT_CUSTOM_VALUES.chonky_cat_id

join CHONKY_CAT_CUSTOM_FIELDS

on CHONKY_CAT_CUSTOM_VALUES.chonky_cat_custom_field_id = CHONKY_CAT_CUSTOM_FIELDS.id

where CHONKY_CAT_CUSTOM_FIELDS.field_name = 'hair style'

and CHONKY_CAT_CUSTOM_VALUES.string_value like '%hawk%'

and CHONKY_CAT_CUSTOM_FIELDS.field_name = 'eye color'

and CHONKY_CAT_CUSTOM_VALUES.string_value like '%blu%';

The problem is that this query is looking for instances where CHONKY_CAT_CUSTOM_FIELDS.FIELD_NAME are simultaneously ‘hair color’ and ‘eye color’. Which can not happen in the same row. Same issue with field_value: It can not be 2 things at once. What is needed are some join aliases:

select * from CHONKY_CATS

join CHONKY_CAT_CUSTOM_VALUES cccv on CHONKY_CATS.id = cccv.chonky_cat_id

join CHONKY_CAT_CUSTOM_FIELDS cccf on cccv.chonky_cat_custom_field_id = cccf.id

join CHONKY_CAT_CUSTOM_VALUES cccv2 on CHONKY_CATS.id = cccv2.chonky_cat_id

join CHONKY_CAT_CUSTOM_FIELDS cccf2 on cccv2.chonky_cat_custom_field_id = cccf2.id

where cccf.field_name = 'hair style'

and cccv.string_value like '%hawk%'

and cccf2.field_name = 'eye color'

and cccv2.string_value like '%blu%';

Now that the SQL has 2 groups of joins with aliases the query is no longer insisting that the same column in a row have 2 different values. Which means we need a unique alias for each extra custom field query we want to add. The issue of keeping these aliases unique can be solved by having the code assembling the query use a sanitized version of the field name. Here’s an example of a filter that will work with any string custom field associated with CHONKY_CATS:

def self.filter_by_custom_value field_name, partial_value

cccf = ChonkyCatCustomField.find_by_field_name field_name

raise "Could not find a field named [#{field_name}]" unless cccf

raise "Only works with strings not [#{cccf.field_type}]" unless cccf.field_type == "string"

shortened_field_name = field_name.

gsub(/\W+/, '_')[0...24] # Sanitize and avoid 30 character limit on Oracle XE leaving room for prefix

base_table_to_value_join = "value_#{shortened_field_name}"

value_to_field_join = "field_#{shortened_field_name}"

joins(

"join CHONKY_CAT_CUSTOM_VALUES #{base_table_to_value_join} on " +

"CHONKY_CATS.id = #{base_table_to_value_join}.chonky_cat_id",

"join CHONKY_CAT_CUSTOM_FIELDS #{value_to_field_join} on " +

"#{base_table_to_value_join}.chonky_cat_custom_field_id = #{value_to_field_join}.id").

where("lower(#{base_table_to_value_join}.string_value) like :partial_value",

partial_value: "%#{partial_value.downcase}%").

where("#{base_table_to_value_join}.field_name = :field_name",

field_name: field_name)

end

# In Rails/ActiveRecord there is currently no way to name the joins

# so we had to write all our joins as SQL snippets.

Given that we need 4 joins to query 2 custom attributes, one can see why this idea is not great. In our case, customers don’t often chain queries beyond 2 at a time (if even that) AND most of the fields in a given table will be actual columns so we are betting we can get away with this. I’ll try to do a follow up article when we have some real world results.

*I know many databases now support JSON queries (including Oracle) but, for various reasons internal to our infrastructure, we chose not to go that route.